Télécharger le fichier pdf d’un mémoire de fin d’études

Real-Time processing for data stream

Recently a new type of data-intensive computation mode has been widely recognized: applications here the data is not processed as persistent static relations but rather as transient dynamic data streams. These applications include financial analysis, network monitoring, telecommunications data management, traffic data monitoring, web applications, manufacturing, sensor networks, and so on. In this model, individual data items are represented by a record with a time-stamp. The data streams continuously arrive in multiple, rapid, time-varying, unpredictable and unbounded manners.

For this type of data, the shortcoming and drawbacks of batch-oriented data processing are obvious. Real-time query processing and in-stream processing is the immediate need in many practical applications. An important feature for a data stream processing system is that it needs to cope with the huge dynamicity in data streams in the near future, both at the architecture and the application level. At the architecture level it should be possible to add or remove computational nodes based on the current load. At the application level, it should be able to withdraw old results and take new coming data into account. However, current parallel processing systems for big data, being tailored to optimal realization of predefined analytics (static monitoring), are lacking the flexibility required for sensing and dynamic processing of changes in the data.

That is why, in recent years, many solutions for stream processing like Twitter’s Storm [5], Yahoo’s S4 [19], Cloudera’s Impala [12], Apache Spark Streaming [21], and Apache Tez [22] appeared and joined the group of Big Data and NoSQL systems. Wherein Twitter Storm is the most successful and most widely used one.

Objectives and Contributions

The join operation is a popular problem in the big data area. It needs more than two inputs data sets, and outputs one or more required information. It has different kinds of applications, varying from kNN join (a join process for two data sets to find the k nearest neighbors for every elements in the query set) to semantic join (a join process in the semantic web area). It is a simple but not trivial and often used operation.

The challenges for designing a good parallel and distributed join method are:

• How to partition data and distribute the partitions to the computing cluster. Since more than one input data sets is involved, we need to make sure that the merge of the calculation of each partition is identical to the join of the whole data set.

• How to minimize the intermediate data communicated through network. Data transmission is unavoidable. So some clustering or classification method needs to be used to avoid unnecessary data transfers. Or some advanced data structures need to be used in order to reduce the size of data to be transferred.

More challenges for designing a good streaming join method are:

• How to withdraw old data.

• How to partition data when we do not have a global knowledge of the whole data set.

In this thesis, we are going to address the streaming join operation for data streams in a parallel and distributed way, both for data driven join and for query driven join, using two representative applications k nearest neighbor join and semantic join respectively.

The major contributions of this thesis are the following:

• A survey of all the method for processing k nearest neighbor join in a parallel way, including the pre-processing step, the data partitioning step and the main computation step. We analyze the performance in both a theoretical way and an experimental way.

• Design parallel processing of k nearest neighbor join to a continuous manner for processing data streams, after summarizing and analyzing all the technologies used for parallel processing kNN join, a parallel and continuous manner of processing kNN join on data stream is proposed. We separate the scenarios into 3 different categories, and we focus on two of them. We design data re-partition and re-computation strategies.

And we implement the methods proposed on Apache Storm in order to evaluate their performance.

• Design a parallel and distributed way to process semantic RDF joins, both for distributing data and for decomposing queries. We use Bloom Filters as the media to transfer intermediate data. This method minimizes the communication cost among nodes. We propose a Query Topological Sort method to determine the order of communications. We then extend this method to process RDF joins in a continuous way for streams. Data expiration and re-evaluation strategies are proposed. We analyze the performance in a theoretical way, for false positive rate, parameter tuning and efficiency. In the end, we implement the whole design on Apache Storm and design benchmarks in order to evaluate the performance.

Organization of Dissertation

This thesis studies the parallel and continuous join operation for data stream. Overall, this thesis is organized as follows:

• Chapter 2: introduces the state of the art of the related domains. It begins from the background of parallel computing, and firstly introduces two main forms of parallelism: data parallelism and task parallelism. Then it presents the most popular Big Data management systems, mainly systems based on the MapReduce paradigm, including Hadoop [2] and its improvements, Spark [3] and YARN [4]. The second part of the background introduces the concepts of stream processing. It first talks about the rules in data stream processing, followed by the introduction of the Sliding Window model and Sliding Window join. Then it analyzes the history about data stream management systems, focuses on the comparison of the 3 most used parallel streaming processing systems: Storm [5], Spark Streaming [21] and S4 [19]. In the end we detail the use of Apache Storm. Another important aspect of this Chapter is to introduce the use cases which will be presented in the following Chapters. Section 2.2.1 introduces the definition of k Nearest Neighbor algorithm, its applications and the traditional way of computing this algorithm. Section 2.2.2 presents the concepts of Semantic Web, including the purpose of Semantic Web, RDF data model and its corresponding query language SPARQL.

• Chapter 3: presents the techniques about processing data driven streaming join in a parallel and distributed manner. We choose kNN and its applications as our key use case to study. This Chapter begins with a short introduction about the methods we have evaluated, followed by an introduction of kNN join for centralized environment, parallel kNN join and continuous kNN join. In the main part of this Chapter, we first decompose the workflow of processing kNN in three steps: data preprocessing, data partitioning and main computation. Some corresponding methods are proposed for each steps. For data preprocessing, we introduce the pre-processing for reducing the dimension of data and to select the central points of data clusters respectively. For data partitioning, we discuss two different types of partition: size based partition which intends to gain a better load balance and distance based partition which tries to gather the most relevant data inside each partition. We separate the main computation steps into two types: the ones with an intermediate computation to get local results, and those which directly calculate the global results. We then theoretically analyze each method from the perspective of load balance, accuracy and complexity. In Section 3.4, we extend the parallel methods presented previously to adapt to a computation for streams. We separate the streaming scenario into three types. New strategies about re-partition and re-computation of the streams are proposed for each type respectively.

In the end, Section 3.5 presents an extensive experimental evaluation for each method, first on MapReduce and from the parallel point of view, then on Storm for evaluating the streaming characteristics.

• Chapter 4: introduces our techniques for processing query driven streaming join in a parallel and distributed manner. We choose RDF data and its query as our key use case to study. This Chapter begins with an introduction of the background and explains the motivation and the goal of this work, followed by a detailed state of the art on RDF processing technologies. In the main parts, we first describe query decomposition, and data distribution. We then explain our method for generating the query plan in 4 rules in Section 4.3. In Section 4.4, we extend the method proposed in Section 4.3 to work in a continuous way with sliding windows. We then analyze our methods in Section 4.5 from the aspects of main parameters of Bloom Filters, dominating parameters, and complexity points of view. The implementation issues are discussed in Section 4.6, where the algorithms for finding the join vertices, judging the category of join vertex, Query Topological Sort and Sliding Bloom Filters, are shown. Finally, we evaluate our method in Section 4.7 with both synthetic and LUBM [14] benchmarks.

• Chapter 5: reviews the contributions and presents some research and development perspectives that may arise from this thesis.

Background and Preliminaries

Background

Parallel Computing

Parallel computing is a computation model which uses two or more processors (cores or computers) in combination to perform multiple operations concurrently. The basic condition for parallel computing is that in general, a large problem can be divided into a limited number of smaller problems, and these small problems can be handled simultaneously.

Unlike the traditional serial computation, parallel computing uses multiple resources simultaneously to solve a problem. The problem should first be cut into a series of instructions, which will later be executed simultaneously on different processors. This model is much more suitable for explaining, modeling and solving complex real world phenomena. It does not only speed up the time spent to perform a large task, but also makes it possible to process large-scale data sets or complex problems which cannot be handled by a single machine.

In parallel computing, there are mainly two forms of parallelism:

– Data Parallelism

– Task Parallelism

We will introduce them separately in the coming two sections.

Data Parallelism

Data parallelism focuses on distributing data across different parallel computing resources, in which the same computation is applied to multiple pieces of data. This is usually used for data-intensive tasks. Fig. 2.1 shows the schematic of data parallelism.

In a data parallelism process, the data set is first divided into partitions, which will later be processed by different processors using the same task. This simple idea makes the storing and handling of big data possible. For example, Facebook has several million photos uploaded each day. But these photos are too large to be stored in a single machine. Then a data parallelism strategy is suitable for this problem.

However, because each machine only has a subset of data, gathering the results together is a problem that this model needs to address. At the same time, the main factor affecting the performance of this model is the transmission of intermediate data, hence reducing the amount of data to be transferred is another problem to face.

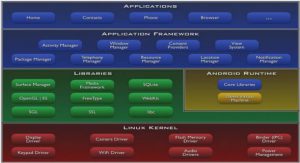

Since data parallelism emphasizes the parallel and distributed nature of data, when the size of data is growing, it is inevitable to use this model in parallel computing. Examples of Big Data frameworks that uses data parallelism are: Hadoop MapReduce [2], Apache Spark[3], YARN[4], and Apache Storm[5].

Task Parallelism

Task parallelism focuses on distributing tasks concretely performed by processors across different parallel computing resources, in which the same data (or may be different data in a hybrid system) is processed by different tasks. This is usually used for computation-intensive tasks.

In a task parallelism process, the parallelism is organized around the functions to be run rather than around the data. It depends on task decomposition. This idea makes it possible to handle a complex problem. For example, in a semantic join, task 1 needs to save the data which meets a certain condition in a specific data structure, and task 2 needs to use the data which meets another condition to probe this data structure. This process can be considered as a task parallel process. The difficulties of this type of process lies first on the decomposition of the work, specifically the decomposition of queries in a join process. Also task parallelism processes usually suffer from bad load balancing, since it is not easy to divide tasks with equal complexity. The communication among tasks is another problem. Synchronization is the most important communication approach in task parallelism processes, and can be divided into thread synchronization and data synchronization. Thread synchronization focuses on determining the order of execution in order to avoid Data Race Condition problems. Data synchronization is mainly used to ensure the consistency among multiple copies of data.

The most common task parallelism is pipelining. Suppose you have multiple tasks, task I, task II and task III, instead of having each one operating on the data independently, pipelining takes the data and first give it to task I, then task II and finally task III. Image processing often chooses to use a pipeline. Images are coming in a stream, some of the processing starts with the first task, and applies a certain filter on the images, then passes on to the second task, and so on. This is a very common combination of task parallelism and data parallelism. An example of pipeline processing of images is shown in Fig. 2.2.

Recently, the most popular task parallelism example is deep learning. Deep learning is a branch of machine learning which is based on a set of algorithms which attempt to model high-level abstractions in data by using multiple processing layers. The difference between deep learning and traditional machine learning is that in deep learning instead of having one model to train all the data, we separate the model into layers, and each layer can be considered as a sub-task of the whole model.

|

Table des matières

1 Introduction

1.1 Big Data Processing

1.2 Issues with Big Data

1.3 Real-Time processing for data stream

1.4 Objectives and Contributions

1.5 Organization of Dissertation

2 Background and Preliminaries

2.1 Background

2.1.1 Parallel Computing

2.1.1.1 Data Parallelism

2.1.1.2 Task Parallelism

2.1.1.3 Big Data Management Systems

2.1.2 Stream Processing

2.1.2.1 Rules in Data Stream processing

2.1.2.2 Sliding Window Model

2.1.2.3 Sliding Window Join

2.1.2.4 Data Stream Management System

2.1.2.5 Introduction to Apache Storm

2.2 Concepts of use cases

2.2.1 k Nearest Neighbor

2.2.1.1 Definition

2.2.2 Semantic Web

2.2.2.1 RDF data model

2.2.2.2 SPARQL Query Language

3 Data Driven Continuous Join (kNN)

3.1 Introduction

3.2 Related Work

3.2.1 kNN Join for centralized environment

3.2.2 Parallel kNN Join

3.2.3 Continuous kNN Join

3.3 Parallel kNN

3.3.1 Workflow

3.3.1.1 Data Preprocessing

3.3.1.2 Data Partitioning

3.3.1.3 Computation

3.3.1.4 Summary Work Flow

3.3.2 Theoretical Analysis

3.3.2.1 Load Balance

3.3.2.2 Accuracy

3.3.2.3 Complexity

3.3.2.4 Wrap up

3.4 Continuous kNN

3.4.1 Dynamic R and Static S kNN Join for Data Streams (DRSS)

3.4.1.1 Workflow for DRSS

3.4.2 Dynamic R and Dynamic S kNN Join for Data Streams

3.4.2.1 Basic Method

3.4.2.2 Advanced Method

3.5 Experiment Result

3.5.1 Geographic dataset

3.5.1.1 Impact of input data size

3.5.1.2 Impact of k

3.5.1.3 Communication Overhead

3.5.2 Image Feature Descriptors (SURF) dataset

3.5.2.1 Impact of input data size

3.5.2.2 Impact of k

3.5.2.3 Communication Overhead

3.5.3 Impact of Dimension and Dataset

3.5.4 Practical Analysis

3.5.4.1 H-BkNNJ

3.5.4.2 H-BNLJ

3.5.4.3 PGBJ

3.5.4.4 H-zkNNJ

3.5.4.5 RankReduce

3.5.5 Streaming Evaluation

3.5.6 Lessons Learned

3.6 Conclusion

4 Query Driven Continuous Join (RDF)

4.1 Introduction

4.2 Related Work

4.2.1 Centralized Solutions for Processing Static RDF Data

4.2.2 Parallel Solutions for Processing Static RDF Data

4.2.3 Continuous Solutions for Processing Dynamic RDF Data

4.3 Parallel Join on RDF Streams

4.3.1 Query Decomposition and Distribution

4.3.2 Data Partition and Assignment

4.3.3 Parallel and Distributed Query Planner

4.4 Continuous RDF Join

4.5 Analysis

4.5.1 Analysis of Bloom Filters

4.5.2 Dominating Parameters

4.5.3 Complexity

4.6 Implementations

4.7 Experiment Result

4.7.1 Experiment Setup

4.7.2 Evaluation about the 3 basic types of join Using Synthetic data

4.7.2.1 The evaluation of parallel performance

4.7.2.2 Impact of number of generations

4.7.2.3 Impact of number of Sliding Window Size

4.7.3 LUBM Benchmark

4.8 Conclusion

5 Conclusion and Future Work

5.1 Conclusion

5.1.1 Data Driven Join

5.1.2 Query Driven Join

5.2 Future Directions

5.2.1 Research Part

5.2.2 Use Cases

5.2.2.1 Real Time Recommendation System

5.2.2.2 Real Time Nature Language Processing System

Appendix A Papers published during this thesis

References

Télécharger le rapport complet