Network Protocols Architecture

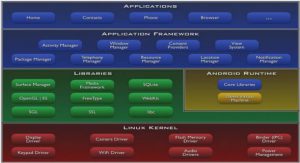

To reduce their design complexity, networks are organized as a stack of layers. The purpose of each layer is to over certain services to the higher layers, keeping the details of the actual implementation hidden. Therefore, a layer on one machine carries on a conversation with a corresponding layer on another machine. The set of rules and conventions used in this conversation are dened by this layer’s protocol. In Figure 1.1 we present a model of the Internet protocols’ layered architecture [99], as well as the classification of some protocols that we study in this thesis. In the following we describe the functions of each layer and we outline the operation of some protocols that will be studied in further detail in other chapters. We also comment on the thesis organization, which in fact follows the protocol stack.

Layer 1: The Physical Layer This layer is concerned with encoding and transmitting data over a communication channel. The physical layer protocols are in charge of moving the raw bits representing the data from one device to another.

Layer 2: The Link Layer The link layer protocols are responsible for forming and monitoring frames (ordered sequences of bits) transferred over a link connecting the sender and the receiver. Broadcast networks have an additional issue: how to control access to the shared channel. A special sublayer of the link layer, the Medium Access Control (MAC) sublayer, deals with this problem. An example of a layer 2 protocol for wired networks is Ethernet (cf. Section 5.1). As shown in Figure 1.1, the wireless protocols that we study in this work are situated in this layer too. In the next section we will describe the dierent types of wireless technologies and the corresponding protocol standards. In fact, the standards generally refer to physical as well as MAC/link layer specifications. However, in this thesis we are mostly interested in the MAC protocols. IEEE 802.11 is a layer 2 protocol that we will study extensively in Chapter 2.

Layer 3: The Network Layer This layer connects machines using possibly different technologies, creating the abstraction of a network. A key issue is determining how packets are routed from source to destination. The most important layer 3 protocol is the Internet Protocol (IP). IP denes an official packet format and identifies each machine with a unique identifier: the IP address. This addressing makes routing possible. Therefore, the OLSR routing protocol which we present in this chapter is a network layer protocol. IP also denes identiers for groups of machines, in order to allow multicast, i.e., one to many communication. Thus, we place multicast routing protocols in the network layer too. Due to the importance of routing, layer 3 considerations are present throughout the thesis when we study other layers. Conversely, this layer is our main focus in Chapters 3 and 4.

Layer 4: The Transport Layer At a basic level, this layer accepts incoming data from applications, fragments it into discrete packets and passes each one on to the network layer; at the other end it reassembles the received packets into the output stream. In fact, the transport layer is a true end-to-end layer, all the way from the source to the destination. In other words, a program on the source machine carries on a conversation with a similar program on the destination machine. Hence, the transport layer is responsible for distributing the right packets to to the right application. This distinction is done by adding to packets application-specic identiers, called ports. The two main layer 4 protocols in the Internet are UDP and TCP. UDP (User Datagram Protocol) is an unreliable, connectionless protocol. TCP (Transmission Control Protocol) overs more transport layer functionalities. It is a reliable connection-oriented protocol that allows a byte stream originating on one machine to be delivered without error on any other machine in the Internet. TCP also handles ow control to make sure a fast sender cannot swamp a slow receiver with more messages than it can handle. The TCP protocol will be described in more detail and analyzed in Chapter 5.

Layer 5: The Application Layer This layer contains the protocols that directly provide services to the users. One widely-used application protocol is HTTP (HyperText Transfer Protocol), which is the basis for Web browsing. Other examples of services are VoIP (Voice Over IP), video streaming etc. We will be concerned with how to manage and organize such services in Chapter 6.

Unified Packet Format

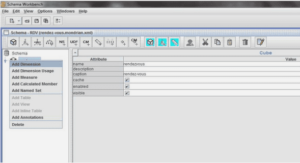

OLSR communicates using a unied packet format for all data related to the protocol. The purpose of this is to facilitate extensibility of the protocol without breaking backwards compatibility. This also provides an easy way of piggybacking different types of information into a single transmission, and thus for a given implementation to optimize towards utilizing the maximal frame-size, provided by the network. These packets are embedded in UDP datagrams for transmission over the network. Each packet encapsulates one or more messages. The messages share a common header format, which enables nodes to correctly accept and (if applicable) retransmit messages of an unknown type. In Figure 1.2 we depict the basic layout of any packet in OLSR (omitting IP and UDP headers). The Message Type eld indicates which type of message is to be found in the MESSAGE part. The VTime eld indicates for how long time after reception a node must consider the information contained in the message as valid. The Originator Address contains the main address of the node, which has originally generated this message.

Topology Dissemination

Each node maintains topological information about the network, obtained by means of TC (Topology Control) messages. TC messages are fooded in the network periodically taking advantage of MPRs to reduce the control traffic overhead. Furthermore, only MPR nodes have the responsibility to declare link state information in the network. In order to save more on control traffic, nodes have the possibility to advertize a small subset of their neighbor links. The advertized link set can be limited to MPR links, i.e., the neighbors that have elected this node as an MPR. In this case the nodes have only a partial knowledge of the network topology. The fact that any given node can compute a shortest path to any arbitrary destination comes from the fact that the node knows its own neighbor list. However there is an option to advertize additional information, as the whole neighbor list.

Distributed Coordination Function

The Distributed Coordination Function (DCF) is the fundamental access method used in the IEEE 802.11 MAC protocol. It is based on the Carrier Sense Multiple Access with Collision Avoidance (CSMA/CA) mechanism, which is designed to reduce the collisions due to multiple sources transmitting simultaneously on a shared channel. In the CSMA/CA protocol, a station transmits only if the medium is idle. The medium is considered as idle if it is sensed to be idle for a duration greater than the Distributed Inter-frame Space (DIFS). If the medium is sensed as busy, the transmission is deferred until the end of the ongoing transmission. When the medium becomes idle after a busy period, the node does not transmit immediately, because multiple stations could have been waiting for the end of the transmission and may attempt to access the channel again at the same time. Therefore, the node starts a random wait by initializing its back-off timer. The back-o timer is randomly selected in an interval called the contention window and has the granularity of one slot. Every time the channel is sensed to be idle, the back-o counter is decremented. When the counter reaches zero, the node can start its transmission. If the channel is sensed as busy during the backov procedure, the counter is frozen and then resumed when the channel becomes idle for a DIFS again. To make sure that a transmitted unicast frame has reached its destination, an Acknowledgement frame is generated from the destination to the source. This frame is sent after a time interval equal to a SIFS (Short InterFrame Space), which is shorter than a DIFS, eectively giving higher priority to Acknowledgement frames. The source node can detect lost frames if after a xed time interval, called AckTimeout, an acknowledgement has not been received. On the other hand, broadcast frames are not acknowledged, hence they are not retransmitted in case there is a collision. The channel access mechanism is summarized in Figure 2.1, where we depict the medium occupancy during a successful unicast frame transmission (i.e., where no collisions occur). The above carrier sense is called physical carrier sense because it is performed at the air interface. A virtual carrier sense is also possible in the DCF mode to resolve the problem of the hidden terminal. This problem occurs when two nodes that are not within hearing distance of each other create collisions at a third terminal that receives the transmission from both. The virtual carrier sense is performed at the MAC sublayer. The channel is reserved before each transmission, so instead of transmitting the data frame after sensing that the channel is idle, the station sends an RTS (Request To Send) frame to the destination. The receiver replies by a CTS (Clear To Send) frame, after which the data transfer can start.

Methodology Overview

A wireless node can be seen as a buer lled by incoming messages and with a single server that performs the CSMA/CA multiple access protocol. We model this system as an M/G/1 queue, i.e., we assume:

1. The input packet ow in the buer is Poisson of rate λ packets per slot;

2. Service delays are independent.

In fact, the M/G/1 hypothesis is just a matter of simplifying approach. Since we are going to deal with heavy tailed distribution of service times, the consequence on queueing time distribution can be generalized to a much larger class of queueing models. For example it is not necessary to assume independence between service times or to restrict to Poisson input in order to derive a power law queueing distribution (but in this case the coeffcients change). Nonetheless, as we verify later in the simulations section, the M/G/1 hypothesis leads to satisfactory results.

Collision Probability Estimation

The collision probability is estimated by OLSR, since this information is not currently provided by wireless cards. OLSR has a procedure in the advanced neighbor sensing option that allows to compute the collision rate of Hello messages (link quality level parameter, cf. Section 1.3.2). It uses the Hello message sequence number in order to identify the missing Hellos. However there could be a difficulty in the fact that the collision probability p(L) may depend strongly on packet length L. One may expect a dependence of the kind − log p(L) = aL + b where a and b are scalar coefficients. Since the neighbor has no idea of the size of missing Hellos, the transmitter should advertize the length distribution of its Hellos. Comparing with its received Hello distribution the neighbor would be able to determine the coefficients a and b. By default the neighbor assumes a = 0, i.e., all packets have the same collision rate regardless of their length.

Finding the Optimal Route

In general, finding the optimal route with respect to a delay distribution is NP hard [57]. But if we stick to the asymptotic expression, we can nd a polynomial Dijkstra like algorithm. The problem is to nd the route that provides the best asymptotic expansion of the quantity P(W(route) > T) when T → ∞. By best asymptotic expansion we mean the one that provides asymptotically the lowest P(W(route) > T). Since we expect that P(W(route) > T) is asymptotically equivalent to P i∈route c∗i T1−Bi (cf. Section 2.2.2), the idea consists in minimizing the sum of the leading terms of the one-hop delay distributions along the route. Hence, the routing algorithm is eectively a Dijkstra algorithm, where the weights on the links are c∗T1−B. Parameters c∗ and B are calculated according to the analysis in Section 2.2.2. The weight of the route is the sum of the weights of the links, and the optimal route is the route that minimizes this sum. When T is nite, the sum of the weights on a route gives an approximation of the end-to-end delay distribution within a factor 1 + O(T −B(route)), according to the asymptotic analysis. Since B(route) > 1, the algorithm is optimal within a factor 1 + O(T−1).

|

Table des matières

Preface

Thesis Organization and Contributions

1 Introduction to Wireless Network Protocols

1.1 Network Protocols Architecture

1.2 Wireless Network Technologies

1.3 Mobile Ad Hoc Networks (MANETs)

1.3.1 Routing in Mobile Ad Hoc Networks

1.3.2 Optimized Link State Routing (OLSR) Protocol

2 Delay Asymptotics and Routing in 802.11 Multi-hop Networks

2.1 IEEE 802.11 MAC Protocol

2.1.1 Distributed Coordination Function

2.1.2 Exponential Back-o Procedure

2.2 Asymptotic Delay Analysis

2.2.1 One-hop Delay Analysis

2.2.2 Multi-hop Delay Analysis

2.2.3 Simulation Results

2.3 Optimal Delay Based Routing

2.3.1 Cross-layer Delay Estimation Protocol

2.3.2 Delay Distribution Based Routing

2.4 Conclusion

3 Scalability Optimizations for Massive Wireless Networks

3.1 Neighborhood Management Optimization

3.1.1 Modeling Massively Dense Ad Hoc Networks

3.1.2 Minimizing the Number of Retransmissions

3.2 Scalability of Routing Protocols

3.2.1 OLSR Scalability

3.2.2 Fish Eye OLSR

3.2.3 Useful Capacity

3.3 Conclusion

Appendix: Factor λ in r(λ)

4 Multicast Scaling Properties in Large Ad Hoc Networks

4.1 Asymptotic Multicast Properties in Ad Hoc Networks

4.1.1 Multicast Cost Scaling Law

4.1.2 Capacity of Multicast Communication

4.1.3 Numerical Results

4.2 Specification and Simulation of MOST Protocol

4.2.1 Overlay Tree Construction

4.2.2 Specification of MOST Protocol

4.2.3 Implementation Overview

4.2.4 Simulation Results

4.3 Conclusion

5 Analysis of Traffic Autocorrelations in Large Networks

5.1 Autocorrelations Due to MAC Protocols

5.1.1 Throughput Analysis

5.1.2 Autocorrelation Analysis

5.2 Autocorrelations in TCP Traffic

5.2.1 TCP Protocol Overview and Models

5.2.2 Autocorrelation Function of a Single TCP Connection

5.2.3 Long Term Dependencies in Multi-user TCP

5.3 Conclusion

6 Replicated Server Placement with QoS Constraints

6.1 Problem Formulation

6.1.1 Optimization Problem Formulation

6.1.2 Problem Decomposition

6.2 Pseudopolynomial Algorithm

6.2.1 Pseudopolynomial Algorithm for Problem 1

6.2.2 Pseudopolynomial Algorithm for Problem 2

6.2.3 Pseudopolynomial Algorithm for Problem 3

6.3 Polynomial Algorithm

6.3.1 Polynomial Algorithms for Problems 1 and 2

6.3.2 Polynomial Algorithm for Problem 3

6.4 Numerical Results

6.5 Conclusion

Appendix: Proofs of the Lemmas

Conclusions and Perspectives

![]() Télécharger le rapport complet

Télécharger le rapport complet