Agents in data mining: Issues and benefits

Agent-based systems require a platform for agents to function and accomplish their tasks. It will increase the complexity of the who le system. Therefore, we should ask our selves if agents are the right technology for a given problem. In knowledge discovery, we are trying to solve very complex problems where the boundary of the problem domain isn’t properly defined (or not defined at ali). Mostly, the data mining techniques are used to define those boundaries by extracting meaningful correlation within data. And, the key issues of an agent-based system are the permission of incomplete knowledge about the problem domain and the possibility to build an intelligent and dynamic system [15]. Therefore, the system can reason, perform tasks in a goal driven way and react to a changing environment. Another advantage will be the possible solution to the problem using distributed systems since each agent encapsulates the behavior and the state. Ali these advantages are detailed in the following subsections. Scalability of DM and dynamic data gathering Our data mining system will be processing data from Bell ODM, which is a huge data repository. Also severa} thousands of operational data is inputted to this database every day. Processing ali data from this data mart at once is practically impossible and scaling up to this massive data set is a necessity. Therefore, we need to apportion the data between agents. The use of agents will facilitate dynamic selection of sources and data gathering. DM system will select and process data autonomously, without human intervention. Also, we can scale up (or scale down) number of agents processing data if required in future which is more difficult to accomplish with a static system without agents.

Multi-strategy DM As mentioned, we are doing descriptive data mining. A multi-strategy DM will be more suitable as we need to apply multiple data mining techniques and in sorne case combination of several techniques. As mentioned in [13], appropriate combination of multiple data mining techniques may be more beneficiai than applying just a particu1ar one. DM agents may learn in due course of their deliberative actions which one to choose depending on the type of data retrieved and mining tasks to be pursued. Multistrategy DM is an argument in favor ofusing agents, as mentioned in [13].

Interactive DM According to [13], pro-actively assisting agents drastically limits the amount a humanuser has to supervise and interfere with the running data mining process, e.g., DM agentsmay anticipate the individual limits of the potentially large search space and proper intermediate results. In [13], the authors treat the role of agents in distributed data mining (DDM). With DDM, the data, instead ofbeen centralized in a single repository, will be distributed over several data source. Therefore, besides the issues of scalability, DDM is also challenged with autonomy and privacy. The privacy is about the ensuring data security and protecting sensitive information against invasion by outsiders, which is not a concem for us because our data crawler system will be local and it will access directly a single data repository which is Bell’s ODM. Autonomy is an issue when the DM agents are considered as a modular extension of a data management system to deliberative! y handle the access to the data source in accordance with constraints on the required autonomy of the system, data and model. Once again, autonomy too isn’t a concem because our system is designed in accordance with Bell’s operational data. Our DM system will fit the client’s data because in order to have an automated DM system we need to focus on specifie clients domains [2].

PASSI Methodology Description

The Data Crawler System is designed and developed following the PASSI methodology. PASSI [ 40] (Process for Agent Societies Specification and Implementation) is a methodology for designing and developing multi-agent systems, using a step-by-step requirement-to code process. It integrates the design models and concepts, using the UML notation, from two dominant approaches in the agent community: 00 software engineering and artificial intelligence. P ASSI is composed of five process components also called ‘model’ and each model is composed ofseveral work activities called ‘phases’. P ASSI is an iterative method such as Unified Process and other widely acceptedsoftware engineering methodologies. The iterations are two types. The first is triggered by new requirements e.g. iterations connecting models. The second takes place only within the Agent Implementation Model every time a modification occurs and is characterized by a double level iteration. This model has two views: multi-agent and single-agent views. Multi-agent view is concemed with the agent society (the agents’ structure) in our target system in terms of cooperation, tasks involved, and flow of events depicting cooperation (behavior). Single agent view concems with the structure of a single agent in terms of attributes, methods, inn er classes and behavior. The outer lev el of iteration ( dashed arrows) is used to represent the dependency between single-agent and multi-agent views. The inner level of iteration, which is between Agent Structure Definition and Agent Behavior Description, takes place in both views (multi-agent and single agent) and it represents the dependency between the structure and the behavior of an agent, there is also a testing activity that is divided into two phases: (single) agent test and social (multi-agent) test. In single agent test, the behavior of the nagents is verified based on the original requirements of the system related to the specifie agent. During the social test, the interaction between agents is verified against their cooperation in solving problems. The models and phases ofPASSI are described in the following subsections.

System Requirements Model This model, as its name suggests, describes the system requirements in terms of agency and purpose and it is composed of four phases as follow:

• Domain Description

• Agent Identification

• Role Identification

• Task Specification

The Domain Description is a conventional UML use-case diagram that provides a functional description of the system. The Agent Identification phase is represented by stereotyped UML packages. The assignment of responsibilities to agents is done during this step by grouping the functionalities, described previously in the use-case diagram, and associating them with an agent. During the role identification, the responsibilities of the precedent step are explored further through role-specific scenarios using a series of sequence diagrams and the Task Specification phase spells out the capabilities of each agent using activity diagrams.

Agent Society Model This model describes the social interactions and dependency among agents that are identified in the System Requirements Model and is composed ofthree phases as follow

• Ontology Description

• Role Description

• Protocol Description

The Ontology Description is composed of Domain Ontology Description and Communication Ontology Description. The domain ontology tries to describe the relevant entities and their relationships and rules within that domain using class diagrams. Therefore, ali our agents will talk the same language by means of using the same domain ontology. In the Communication Ontology Description, the social interactions of agents are described using class diagrams. Each agent can play more than one role. The Role Description step involves of showing the roles played by the agents, the tasks involved communication capabilities and inter-agent dependencies using class diagrams. Plus the Protocol Description that uses sequence diagrams to specify the set of rules of each communication protocol based on speech-act performatives.

Agent Implementation Model This model describes the agent architecture in terms of classes and methods. Unlike object-oriented approach, there are two levels of abstraction: multi-agent level and single-agent level. This model is composed oftwo phases as follow

• Agent Structure Definition

• Agent Behavior Description

The structure of the agent-based system is described using conventional class diagrams and the behavior of the agents (multi-agent level and single-agent level) is described using activity diagrams and state diagrams.

Code Model This model is at code level and it requires the generation of code from the model using the PASSI add-in and the completing of the code manually.

Deployment Model This model describes the dissemination of the parts ofthe agent systems across hardware processing units and their migration between processing units and it involves the following phase:

• Deployment Configuration

The Deployment Configuration describes also any constraints on migration and mobility in addition to the allocation of agents to the available processing units.

RDF- Resource Description Framework

RDF framework is based on an entity-relationship model and it is used to model meta-data. It is the selected knowledge language used in P ASSI to describe the domain ontology. It is built on the rules below:

• A Resource is anything that can have a URI;

• A Property is a Resource that has a name and can be used as a property;

• A Statement consists of the combination of a Resource, a Property, and a value. These parts are known as the ‘subject’, ‘predicate’ and ‘object’ of a Statement;

• These abstract properties can be expressed in XML. Plus, RDF is designed to have the following characteristics:

• Independence: Since a Property is a resource, any independent organization (or person) can invent them.

• Interchange: Since RDF Statements can be converted into XML, they are easily interchangeable.

• Scalability: RDF statements are simple, composed of three elements (Resource,Property, value), as a result they can be handled easily even the number of statements getting larger in time.

DATA MINING METHODS SELECTION

In this section, instead of listing the selected DMTs we will explain the strategy for selecting data mining methods for the Data Crawler System. The selection strategy is the outcome of the analysis that we did during our methods selection process. The study mostly focused on the impact of high dimensionality of the dataset on the quality of the produced model with severa! clustering methods. This analysis is described in detail in document « Use of unsupervised clustering algorithm in high dimensional dataset » in APPENDIX2.Three clustering algorithms were used to conduct the analysis: PART algorithm, fuzzy c-means and k-means. The clustering algorithms were selected because they are unsupervised DMTs and descriptive data mining can be performed only with unsupervised DMTs. Those DMTs were applied on synthetic data similar to data from Bell’s ODM. The synthetic data was generated randomly. The data from Bell’s ODM weren’t used because we don’t have any valuable prior knowledge on it and a reference is needed to validate the produced clusters. In high dimensional data set two points will be far apart from each other on few dimensions only and on full dimensional space and on full dimensions the distance between every pair of points will be almost the same. Therefore, as it has been confirmed by our experimentation, most of the classical clustering methods will not be efficient for mining data from Bell’s ODM because of the sparsity of data in high dimensional space and ali dimensions are used by these DMTs. As seen with k-means and fuzzy c-means results, searching clusters in full dimensions can lead to erroneous results. Subspace clustering methods are an excellent way of mining high dimesional data.Before deciding on a DMT several points should be considered. For example, fuzzy cmeans and k-means need the number of searched clusters to be specified. In our case there isn’t enough prior knowledge to identify the number of searched clusters. Therefore, the following criteria should be considered in selecting a method for our system:

• Prior knowledge requirements and domain specifie strategy of DM methods: Prior knowledge requirements are the most important aspect to consider for choosing a method for data mining because of our lack of knowledge about data. For example, if the method to be selected requires any knowledge related to clusters such as number of clusters or number of dimensions (or the specifie dimensions) that form the clusters or any other information specifying the form of the clusters, the method should be rejected. Otherwise, the input parameters of the method should be identified and the value of the input parameters should be defined according to our data. The domain specifie strategy of data mining methods is the establishment of a strategy for defining the prior knowledge requirements of data mining methods according to input data space.

• Unsupervised, Descriptive data mining methods: The goal in building the data crawler system is to gain an understanding ofBell’s operations by uncovering patterns and relationships in ODM. In literature, this is know as descriptive data mining which produces new, nontrivial information based on the available data set. Unsupervised learning’s goal is to discover « natural » structure in the input data and there is no notion of output during the learning process compared to supervised learning where unknown dependencies are estimated from known input-output samples. For example, with classification analysis which is a supervised leaming, the output is taken from a set of known class but with clustering which is an unsupervised leaming, we don’t have a set of a priori known clusters. Therefore, only unsupervised descriptive data mining methodologies such as clustering analysis, association rules, or sorne artificial neural networks such as PART algorithm will be used.

• Sensibility to high dimensionality: As shown in [27], in a high dimensional space, the distance between every pair of points is almost the same for a broad variety of data distributions and distance functions. For example, in such condition, we can’t perform clustering in full space of all dimensions. The subspace clustering which aims to find clusters formed in subspaces of the original high dimensional space is a viable solution to the problem. Therefore, we will opt for data mining methods that are not affected by the high dimensionality of data.

• Scalability: Scalability of data mining methods isn’t a priority requirement because our system isn’t online and the quality of the extracted knowledge is more important than the responsiveness of the system. However, it is anaspect to consider in design because there exist sorne methods which offer a good balance between performance and output quality such as MAFIA [28] clustering method. This algorithm uses an adaptive grid based on the distribution of data to improve efficiency and cluster quality and introduces parallelism to improve scalability.

• Complexity (processing time): Same as with scalability, the complexity isn’t a priority requirement but it will be considered in design and during the selection of the data mining methods the same way as scalability.

CONCLUSION

This project was a good opportunity to understand the concept of subspace clustering and the impact of large set of dimensions on output clusters. We observed that even with only with 5-dimensional data sets traditional clustering methods wasn’t able to find most of the initial subspace clusters. Therefore, subspace clustering methods which are very efficient in finding clusters in different subspaces within a dataset, are preferred, against classical clustering methods, to be used in data crawler system. Unfortunately, during the implementation we faced up sorne obstacles. The first Matlab implementation of PART neural network algorithm which behaved properly with low dimensional and with fewer data sets but with higher dimensional size (> 100 dimensions) and with number of instances more than 5000, we couldn’t get any results because of memory overload. Therefore, we decided to implement PART algorithm with Java programming language, which offers more flexibility in memory management. Also, the simulation time was considerably reduced and the source code in Java can be used later in the data crawler system implementation. Our experimental procedure had sorne minor deficiency. For example, random entry for each data input is better experimental practice than use of each data input sequentially as we did. Also, we should compare the input-output clusters in basis of their dimensions and their anchor point (center point). However, as mentioned earlier, our goal wasn’t to test the methods itselfbut to study their possible use in our data crawler system. As shown in article [51], there are many other subspace clustering methods such as MAFIA, CLTree, Cell-based Clustering Method (CBF), which don’t require prior knowledge related to the output cluster’s form like PART algorithm. It will be interesting to do further studying for implementing those methods in the data crawler system.

|

Table des matières

SOMMAIRE

ABSTRACT

TABLE OF CONTENTS

Page

ACKNOWLEDGEMENT

LIST OF TABLES

LIST OF FIGURES

LIST OF ALGORITHMS

ACRONYMS

INTRODUCTION

1.1 Data Mining Concepts

1.1.1 What is Data Mining?

1.1.2 How to automate data mining process?

1.2 Agents Theories

1.2.1 What is an agent?

1.3 Agents in data mining: Issues and benefits

1.4 Objectives

CHAPTER 1 STATE OF THE ART

CHAPTER 2 METHODOLOGY

2.1 PASSI Methodology Description

2.1.1 System Requirements Model

2.1.2 Agent Society Model

2.1.3 Agent Implementation Model

2.1.4 Code Model

2.1.5 Deployment Model

CHAPTER 3 KNOWLEDGE REPRESENTATION

3.1 RDF- Resource Description Framework

CHAPTER 4 DATABASE ISSUES

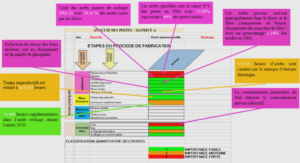

CHAPTER 5 DATA MINING PROCESS ANALYSIS

5.1 Step 1 – Define the problem

5.2 Step 2- Understand the data

5 .2.1 Identify inappropriate and suspicious attributes

5.2.2 Select the most appropriate attribute representation

5.2.3 Create derived attributes

5.2.4 Choose an optimal subset of attributes

5.3 Step 3- Prepare the data

5.4 Step 4- Estimate the model

5 .4.1 « Estimate the mode l » process flow

5.4.2 Decision Trees

5.4.3 Association Rules

5.4.4 Clustering

5.5 Step5- Interpret the model

CHAPTER 6 DATA MINING METHODS SELECTION

CHAPTER 7 DATA CRAWLER ARCHITECTURE

CHAPTER 8 EXPERIMENTS AND RESULTS

CONCLUSION AND FUTURE WORK

APPENDIX 1

APPENDIX2

REFERENCES

![]() Télécharger le rapport complet

Télécharger le rapport complet