Micro-architectural simulation of ARM cores

To evaluate the capability of micro-architectural code adaption of the proposed run-time auto-tuning approach, I developed a simulator that can estimate the performance and energy consumption of heterogeneous ARM cores. Most of the previous work studying core asymmetry employed other ISAs instead of ARM. In the embedded context the ARM ISA is widely used.

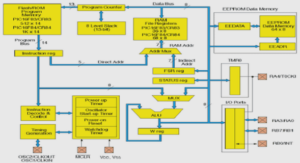

However, in the beginning of this thesis, a simulation framework of power and performance of inorder and out-of-order ARM cores did not exist. The gem5 and McPAT simulators were taken as the starting point for the framework. Therefore, this thesis contributes by describing in detail a simulation platform for heterogeneous in-order and out-of-order ARM cores. I enhanced gem5 to better model cores from the ARM Cortex-A series and McPAT to better model core heterogeneity. The simulation framework was validated in two phases: the performance estimations were compared to real ARM hardware, and the energy estimations were validated in a relative way by simulating the energy and performance trade-offs of big.LITTLE CPUs.

This thesis demonstrates that gem5 can simulate ARM cores with considerable lower timing errors than similar micro-architectural simulators, and that a simple McPAT model extension provides accurate area estimation and acceptable energy/performance trade-offs estimations of big.LITTLE CPUs, when coupled to gem5.

Thesis organization

This thesis begins by presenting the state of the art in Chapter 2, which surveys the following topics: the energy reduction techniques integrated into compilers; micro-architectural simulators of embedded processors; run-time code generation, optimization and specialization; and online auto-tuning.

Chapter 3 details the development and validation of a micro-architectural simulator of heterogeneous ARM cores, based on the simulation frameworks gem5, for performance, and McPAT, for power and area estimation. First, both simulators are presented, including the output conversion of gem5 to input for McPAT. Then, the performance, area and relative energy/performance validations against real ARM cores are described. Finally, an example of architectural/micro-architectural exploration is presented, just before discussing the scope and limitation of the proposed simulation framework and the conclusion.

Chapter 4 presents deGoal, a run-time code generator, and details its porting and validation to the ARM Thumb-2 ISA and the new features developed during this thesis. It begins by presenting the deGoal language with an example of a deGoal code compared to equivalent C source codes. Then, the porting to ARM and the new features are introduced, mostly focusing on the implementations that are needed by a run-time auto-tuner. A preliminary validation is presented, followed by experiments of dynamic code specialization. At the end of this chapter, the scope and limitations of deGoal and its validation are discussed, before the conclusion.

Chapter 5 describes the methodology and proof of concept of a run-time auto-tuning framework for embedded systems. First, I argue why run-time auto-tuners should be developed for embedded systems, following by a motivational example. Then, the methodology and experiments around two case studies in two ARM cores and 11 simulated cores are detailed. Finally, the scope, limitations and conclusion of this study are discussed.

Chapter 6 summarizes the achievements and contributions, and discusses future prospects of this thesis.

Energy reduction techniques integrated into compilers

The energy consumption can be reduced by software in several layers: drivers, operating systems, libraries, compilers and applications. This thesis is interested in dynamic approaches at the compiler level. This section begins by presenting power and energy reduction techniques in software, and then techniques integrated into compilers. Dynamic compilation historically addressed performance, its usage to reduce the energy consumption of processors is very recent [134]. As a consequence, this section mainly presents the state of the art of techniques employed in static compilers and dynamic techniques not necessarily embedded into compilers.

Energy reduction in software

The energy consumption can be reduced in software by directly acting in the dynamic or static power dissipations. As the energy consumption depends on the power and the elapsed time, speeding up the execution can also reduce the energy. First, power techniques are presented, then I argue why energy consumption was historically addressed by reducing execution time.

The ARM architecture

The ARM architecture is used in a broad range of embedded systems, from low-power micro controllers, such as the cores defined by the Cortex-M series, to high-performance embedded processors with memory management unit (MMU), as the cores defined by the Cortex-A series, which includes 32-and 64-bit processors.

Currently, there are three main instruction sets: the A64 (for 64-bit processors), A32 (a 32-bit ISA, once called ARM 32-bits) and T32 (a 32-bit ISA, with variable instruction widths). The T32 ISA, also known as Thumb-2, defines instructions whose widths are 16 or 32 bits, and extends a previous ISA called Thumb-1, which defined a 16-bit wide instructions. The A64 ISA has 31 general purpose registers and 32 SIMD registers, accessed as 128-, 64-, 32-, 16- or 8-bit registers [14, Section B1.2.1]. The A32 and T32 ISAs have 13 general purpose registers, and 32 SIMD registers of 64 bits, also aliased as SIMD registers of 128 bits, which can be viewed as 32-, 16- or 8-bit elements. Thumb-1 instructions have access to only 8 general purpose registers and support neither FP nor SIMD instructions. High performance cores from the Cortex-A series can embed FP and/or SIMD extensions, called VFP and Advanced SIMD, respectively. The Advanced SIMD architecture, implementations and supporting software is referred as NEON.

Embedded processor simulation

Simulation is mainly employed for three reasons:

• The real experimental setup is very expensive or impossible: For example in high-performance processors, obtaining reliable measurements of the behavior of core components without perturbing the circuit is impossible.

• Experimental or theoretical research: Some experiments suppose ideal conditions to simplify the analysis or to focus on the main problem. Other examples are the proof of a concept and the simulation of modified versions of existing hardware.

• Prototyping: Before synthesizing a complex circuit, prototyping through simulation shortens the process, avoiding simple project errors. First, this section introduces the abstraction levels of processor simulation. Then, the state of the art of micro-architectural ARM simulators for performance and power estimation is presented.

Abstraction levels

Depending on the accuracy and complexity of the simulated software, six main abstraction levels are typically identified and described in the following.

Run-time code optimizations

In this section, the state of the art of run-time code optimizations through specialization or autotuning is presented. This work focuses on high-performance run-time techniques comparable to statically compiled languages, therefore just-in-time (JIT) compilers that for example accelerate interpreted languages or ahead-of-time compilers are not presented. HotSpot for the Java language and V8 for JavaScript are examples of JIT compilers and Android Runtime is an example of ahead-of-time compiler, which are not surveyed here. Four fields are briefly surveyed: run-time code specialization, dynamic binary optimizations, run-time recompilation and online auto-tuning.

Run-time code specialization

Run-time code specialization is implemented by two main techniques: code generation and instruction insertion into code templates.

Run-time code generation

Dynamic code generators define specific or supersets of languages for run-time code generation. Program or data specialization are implemented by special language constructs that capture dynamic information.

Fabius [87] was proposed to dynamically generate and specialize machine code to accelerate ML programs, achieving comparable or even faster speedups than C-optimized versions. The optimizations included constant propagation, loop unrolling and strength reduction. The compiler needed programmer annotations to indicate which function arguments were going to be processed and specialized at run time. Whole functions could also be annotated to be inlined at run time. Fabius achieved a very fast code generation speed because no support for register allocation and instruction scheduling was implemented. The lack of mutable data structures in the ML language also contributed to the code generation speed, because memory aliasing analysis is not necessary.

‘C [112] and tcc implemented an ANSI C superset and compiler that allowed high-level code to be dynamically translated into machine instructions. ‘C provided two run-time systems: a one-pass code generator for generation speed and a more elaborated back-end with a simple intermediate representation (IR) for improved register allocation and code quality. The backquote or tick character (‘) indicates the beginning of a dynamically generated statement, including whole functions with dynamic number of parameters and types. Dynamically assigned variables are indicated by a preceding “$” sign, inside backquote expressions, allowing the incorporation of run time values as constants in the dynamically generated code. Static and dynamic types are allowed through special keywords.

With more abstraction and optimizing possibilities, TaskGraph [26] implements a C-like sub language for dynamic code generation, and is integrated into applications as a C++ library. It is very portable, any TaskGraph application and its dynamic code are generated by any standard C compiler. Internally, TaskGraph uses the SUIF-1 representation to perform optimizing passes. TaskGraph is highly portable, but suffers from high run-time overheads, being only adapted to long-running workloads. 2015].

Run-time recompilation

Run-time recompilation is a technique that tries to take into account run-time information to produce more optimized executable code.

Kistler and Franz [80] proposed a system for continuous program optimization. While a program runs, the recompilation system tries to find better versions running in the background. Two new optimizations were proposed, and considerable speedups were observed when they were applied. Nonetheless, the dynamic recompilation system suffered from high overheads, and the time required to pay off was at least two minutes in a PowerPC 604e.

Recently, Nuzman et al. proposed a JIT technology for C/C++ [108]. Their approach consists in delivering executable files along with an IR of the source code. In other words, an initial binary code is provided to avoid the overhead of generating it at run-time. Then, a JIT compiler is charged to profile the running code and recompile hot functions in idle cores. They claim that their work is the first to obtain performance gains from recompilation of regular/short-running programs. In their main experiment, the benchmarks were compiled with moderate optimization levels, arguing that this is a realistic context in the real world. On average their approach provided speedups of 1.07 in CINT2006 benchmarks, running in a POWER7 processor.

Online auto-tuning

Auto-tuning has been used to address the complexity of desktop- and server-class systems. This approach tries to automatically find the best compiler optimizations and algorithm implementations for a given source code and target CPU. Usually, such tools need long space exploration times to find quasioptimal machine code. Only few work addressed auto-tuning at run-time.

ADAPT [135] was one of the first frameworks to dynamically optimize a running application, without prior user intervention. The dynamic compilation was performed in an external processor or in a free processor inside the computing system. In three experiments, ADAPT was only able to speedup workloads that run during more than one hour on an uniprocessor.

Active Harmony [129] is a run-time compilation and tuning framework for parallel programs. By defining a new language, programmers can express tunable parameters and their range, which are explored at run time. In experiments performed in cluster of computers, the code generation is deployed in idle machines, in parallel to the running application. Active Harmony provided average speedups between 1.11 and 1.14 in two scientific applications, run with various problem sizes and in three different platforms. IODC [45] is a framework of iterative optimization for data centers, which is transparent to the user. The main idea is to control the intensity of space exploration (recompilations and training runs) accordingly to the savings achieved since the beginning of the execution, because the execution time of the workload is assumed to be unknown. For compute intensive workload, IODC achieved an average speedup of 1.14.

SiblingRivalry [4] is a auto-tuning technique in which half of cores in multi-core processors are used to explore different auto-tuning possibilities with online learning, while the other half run the best algorithm found so far. It can both adapt code on-the-fly to changing load in the system, and to migrations between micro-architectures. In this latter case, on average this approach both speeded up by 1.8 and reduced the energy consumption of eight benchmarks by 30 %, compared to statically auto-tuning code for Intel Xeon and running in AMD Opteron, and vice-versa (reference versions were allowed to use all cores).

SIMD load and store micro-operations

I observed considerable slowdown in some NEON load and store instructions. The source of the problem was that some SIMD micro-operations had no specific operation class, being then assigned to the default one (FloatAdd).

I assigned 21 missing operation classes to better model NEON loads and stores that interact with sub-elements in a SIMD register. Examples of such instructions are VLD1.16, VLD2.16, VLD3.16 and VLD4.16, which load 1 to 4 consecutive 16-bit elements from the memory into vector registers. Table 3.2 shows the timing of some instructions with and without the improvement, and timings from the Cortex-A8 and A9 [7, 11]. Of course, one could create customized micro-operations per instruction, being more accurate at the cost of less flexibility. I decided to keep the original micro operation implementation, because I have no information of how those NEON macro-instructions should be decomposed.

cache bus width to the pipeline

In gem5, there is no core/cache parameter that allows us to set the L1-D cache bus width to the pipeline. For example, in the Cortex-A8 (32-bit core), this bus in the NEON unit is 128-bit wide, allowing to load and store up to four words per cycle [7, Section 7.2]. In gem5, only one word per cycle can be processed by a load or store unit, therefore instructions that load or store multiple registers could be considerably slowed down, such as LDM* and STM* instructions.

The number of micro-operations and hence the number of cycles required by a load or store instruction (considering cache hit) depends on its description in the ISA support of the simulator. Currently, there is no way to easily set the number of words accessed per cycle, because for each possible value the description of memory instructions should be modified.

I addressed this problem with an approximate solution: bursts of micro-operations are allowed to be processed at once, if they are part of a load or store multiple registers. The number of micro operations in a burst is limited to respect the maximum number of L1-D cache accesses per cycle. The drawback of this approach is that the front-end bandwidth may limit the flow of micro-operations that in realityshould be merged, also increasing the power dissipation. With this modification, an auto-tuner can explore the performance trade-offs of generating code with instructions that load/store a single or multiple registers.

In-order model based on the O3 CPU model

One of the hypotheses of this thesis is that run-time code adaption to a target pipeline can improve the performance and reduce the energy consumption. For energy efficiency, in-order pipelines are preferred over out-of-order designs. Hence, it would be interesting to compare the performance gap and energy benefits of the proposed run-time technique run in an in-order pipeline to a statically compiled code run in a similar out-of-order pipeline. To this aim, first I argue why I chose to modify the O3 model to mimic an in-order pipeline. Next, I explain how the O3 model was modified, then a qualitative explanation of the simulation precision of this approach is presented. Its quantitative validation is presented in Section 3.4.

|

Table des matières

I Thesis

1 Introduction

1.1 Thesis contribution

1.1.1 Run-time code generation and auto-tuning for embedded systems

1.1.2 Micro-architectural simulation of ARM cores

1.2 Thesis organization

2 State of the art

2.1 Sources of energy consumption in ICs

2.1.1 Static or leakage power

2.1.2 Dynamic power

2.2 Energy reduction techniques integrated into compilers

2.2.1 Energy reduction in software

2.2.2 Compiler techniques

2.3 The ARM architecture

2.4 Embedded processor simulation

2.4.1 Abstraction levels

2.4.2 Micro-architectural performance simulation

2.4.3 Micro-architectural energy simulation

2.5 Run-time code optimizations

2.5.1 Run-time code specialization

2.5.2 Dynamic binary optimizations

2.5.3 Run-time recompilation

2.5.4 Online auto-tuning

2.6 Conclusion

3 Micro-architectural simulation of ARM processors

3.1 gem5

3.1.1 The arm_detailed configuration

3.1.2 Modeling improvements

3.1.3 In-order model based on the O3 CPU model

3.2 McPAT

3.2.1 Overview

3.2.2 Better modeling core heterogeneity

3.3 Parameters and statistics conversion from gem5 to McPAT

3.4 Performance validation

3.4.1 Reference models

3.4.2 Simulation models

3.4.3 Benchmarks

3.4.4 Accuracy evaluation of the Cortex-A models

3.4.5 In-order model behavior and improvement for a Cortex-A8

3.5 Area and relative energy/performance validation

3.5.1 Reference models

3.5.2 Simulation models

3.5.3 Benchmarks

3.5.4 Area validation

3.5.5 Relative energy/performance validation

3.6 Example of architectural/micro-architectural exploration

3.7 Scope and limitations

3.7.1 Scope

3.7.2 Limitations

3.8 Conclusion

4 Run-time code generation

4.1 deGoal: a tool to embed dynamic code generators into applications

4.1.1 Utilization workflow

4.1.2 Example of kernel implementation: C with and without SIMD intrinsics and deGoal versions

4.1.3 The Begin and End commands

4.1.4 Register allocation

4.1.5 Code generation decisions: deGoal mixed to C code

4.1.6 Branches and loops

4.2 Thesis contribution: New features and porting to ARM processors

4.2.1 Overview of contributions

4.2.2 SISD and SIMD code generation

4.2.3 Configurable instruction scheduler

4.2.4 Static and dynamic configuration

4.2.5 Further improvements and discussion

4.3 Performance analysis

4.3.1 Evaluation boards

4.3.2 Benchmarks and deGoal kernels

4.3.3 Raw performance evaluation

4.3.4 Transparent vectorization: SISD vs SIMD code generation

4.3.5 Dynamic code specialization

4.3.6 Run-time auto-tuning possibilities with deGoal

4.4 Scope and limitations

4.4.1 Scope

4.4.2 Limitations

4.5 Conclusion

5 Online auto-tuning for embedded systems

5.1 Motivational example

5.2 Methodology

5.2.1 Auto-tuning with deGoal

5.2.2 Regeneration decision and space exploration

5.2.3 Kernel evaluation and replacement

5.3 Experimental setup

5.3.1 Hardware platforms

5.3.2 Simulation platform

5.3.3 Benchmarks

5.3.4 Evaluation methodology

5.4 Experimental results

5.4.1 Real platforms

5.4.2 Simulated cores

5.4.3 Analysis with varying workload

5.4.4 Analysis of correlation between auto-tuning parameters and pipeline designs

5.5 Scope, limitations and future work

5.5.1 Scope

5.5.2 Limitations

5.5.3 Future work

5.6 Conclusion

6 Conclusion and prospects

6.1 Achievements

6.1.1 Embedded core simulation with gem5 and McPAT

6.1.2 Run-time code generation and auto-tuning for embedded systems

6.1.3 Summary of achievements

6.1.4 Amount of work

6.2 Prospects

II Appendix

A gem5 to McPAT conversion tables

List of figures

List of tables

Bibliography

Personal bibliography

Glossary

Résumé étendu